from your_project import load_model, train, predict

model = load_model('transformer')

model = train(model)

score = predict(model)

from your_project import load_model, train, predict, get_submodules

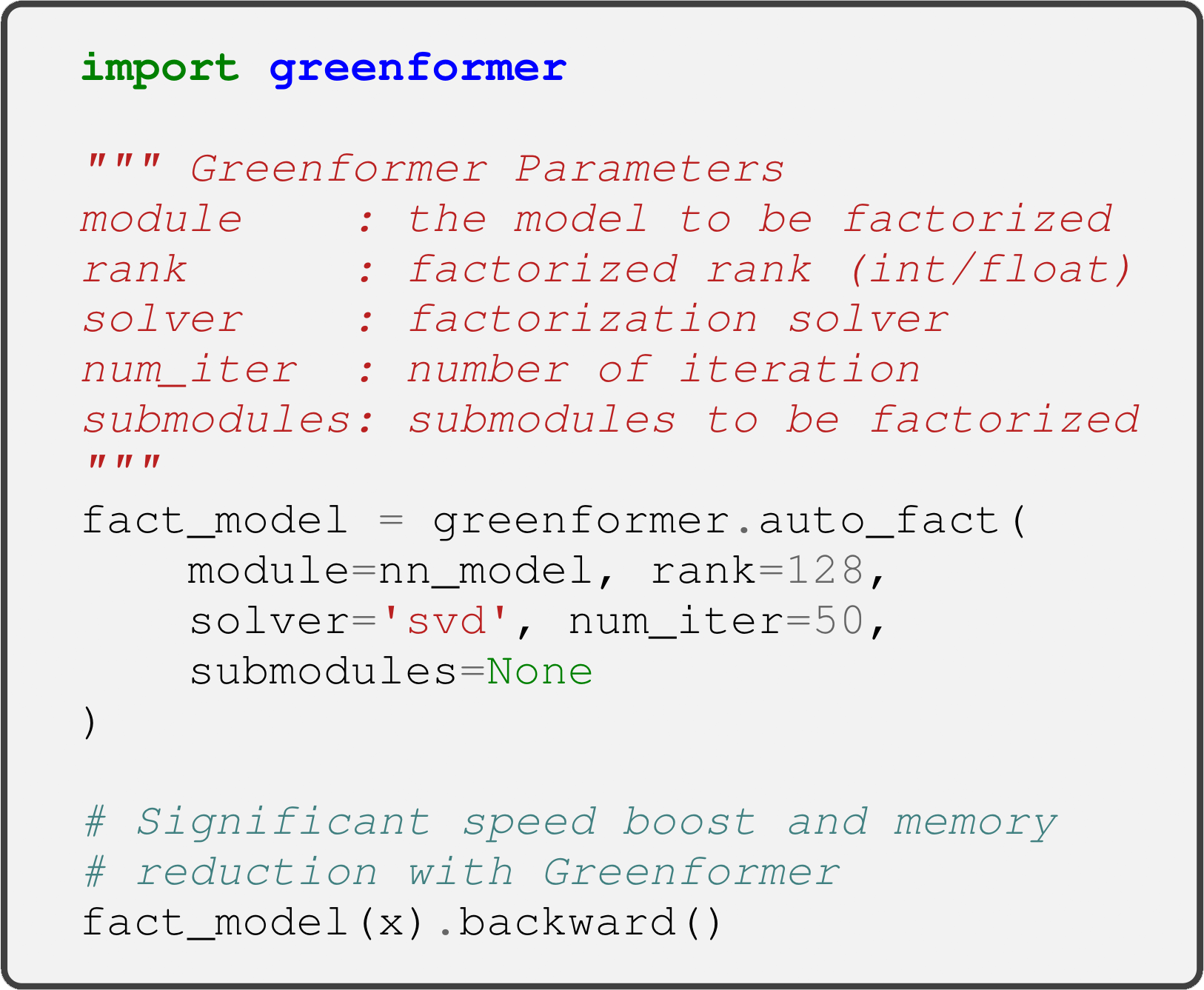

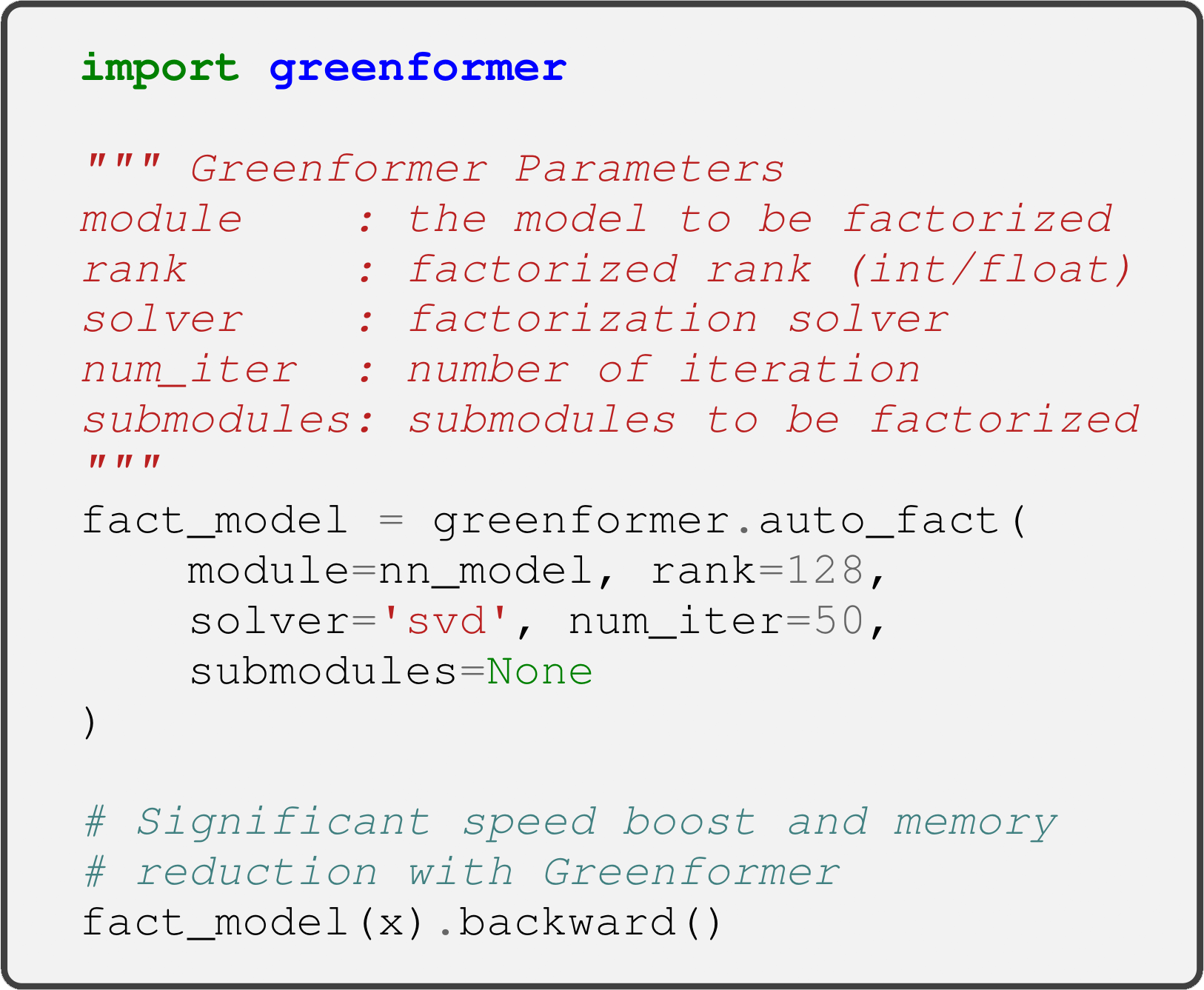

from greenformer import auto_fact

model = load_model('transformer')

model = auto_fact(module=model, rank=64, solver='random', num_iter=50, submodules=None)

model = train(model)

submodules = get_submodules(model, 'bert-base')

model = auto_fact(module=model, rank=64, solver='svd', num_iter=50, submodules=submodules)

score = predict(model)
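To give a rough sense of why factorizing with `rank=64` can make a model lighter, the sketch below counts weights before and after a low-rank split of a single linear layer. It assumes that each factorized layer's `out x in` weight matrix is replaced by two factors of shapes `out x rank` and `rank x in`, which is the usual form of low-rank factorization. The helper names `dense_params` and `factorized_params` and the layer sizes are hypothetical and chosen only for illustration; they are not part of `greenformer`.

```python
def dense_params(in_features: int, out_features: int) -> int:
    """Weights in a full (unfactorized) linear layer, ignoring the bias."""
    return in_features * out_features


def factorized_params(in_features: int, out_features: int, rank: int) -> int:
    """Weights after splitting W (out x in) into (out x rank) and (rank x in)."""
    return rank * (in_features + out_features)


# Illustrative layer shapes (assumed sizes, not read from any real model).
layers = {"attention projection": (768, 768), "feed-forward": (768, 3072)}

for name, (n_in, n_out) in layers.items():
    full = dense_params(n_in, n_out)
    low = factorized_params(n_in, n_out, rank=64)
    print(f"{name}: {full} -> {low} weights ({full / low:.1f}x fewer)")
```

Under these assumptions the saving grows with layer size: the factorized count scales with `in + out` rather than `in * out`, so a fixed rank compresses larger layers more aggressively. Whether accuracy is preserved at a given rank is a separate question that has to be checked empirically.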

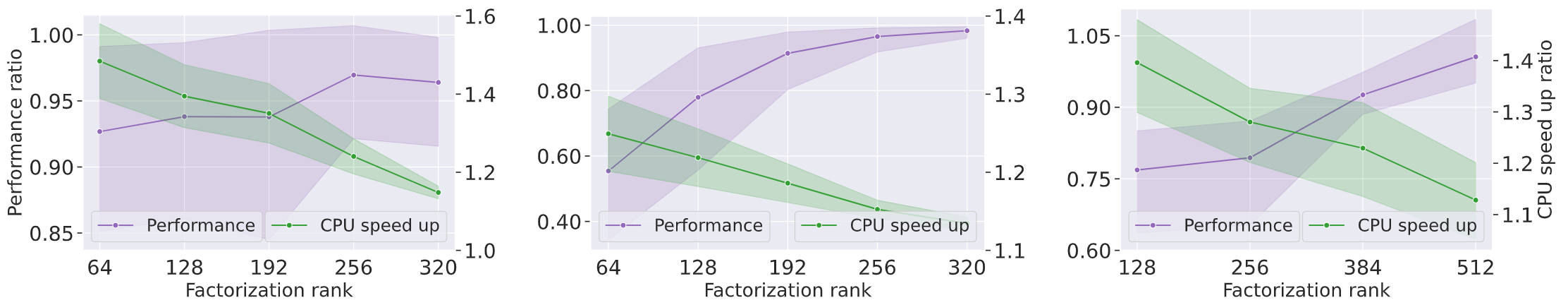

Faster, more memory-efficient training without sacrificing performance

Productionize a pre-trained model in a faster, lighter, and cheaper way

More efficient few-shot in-context learning with billion-parameter models